John White’s Illegitimate 2013-to-2014 LEAP/iLEAP Comparisons

Whenever Louisiana State Superintendent John White gets his hands on test scores, there will be problems.

He withheld release of the 2014 Louisiana Educational Assessment Program (LEAP) and Integrated Louisiana Educational Assessment Program (iLEAP) scores, which districts expected on Friday, May 16, 2014, and he did so without explanation.

Today, May 27, 2014, he defended his decision before district superintendents by telling them that compared to previous years, this year’s scores were not late.

Districts across the state had come to expect to deliver LEAP and iLEAP scores to parents on Friday, May 16, 2014, because that was the third Friday in May, the Friday on which LEAP and iLEAP scores are usually released. White even acknowledged in a letter that the scores would be released later than in previous years:

In recent years, the Department has released these results May 17 or 18.

Consider those two dates more closely:

Friday, May 17, 2013.

Friday, May 18, 2012.

Thus, the commonly-held expectation that LEAP and iLEAP scores would be released on Friday, May 16, 2014, was logical, and White knew it.
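Both of those dates, along with May 16, 2014, fall on the third Friday of May, which is easy to confirm. Here is a minimal sketch using Python's standard `datetime` module. The helper `third_friday_of_may` is a hypothetical name for illustration and does not come from LDOE:

```python
from datetime import date


def third_friday_of_may(year: int) -> date:
    """Return the third Friday of May in the given year."""
    first = date(year, 5, 1)
    # date.weekday(): Monday == 0 ... Friday == 4
    days_to_first_friday = (4 - first.weekday()) % 7
    # Two more weeks after the first Friday gives the third Friday.
    return date(year, 5, 1 + days_to_first_friday + 14)


for year in (2012, 2013, 2014):
    print(year, third_friday_of_may(year))
# 2012 2012-05-18
# 2013 2013-05-17
# 2014 2014-05-16
```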

In that letter, White makes another statement, one that solidifies the reality that any comparison of 2014 LEAP and iLEAP scores with those of prior years is useless:

An important step [toward connecting Common Core and its PARCC assessment] has been the one-time LEAP and iLEAP tests aligned to those new expectations (Common Core). [Emphasis added.]

I won’t pretend for a minute that White has actually carried out any sophisticated psychometric procedures to develop tests that are “aligned” with the Common Core he refuses to call by name (instead resorting to the vanilla term, “new expectations”). However, he has acknowledged that the LEAP and iLEAP tests used in 2014 are different tests than those in previous years.

Thus, for White to declare in his May 27, 2014, press release that scores “remain steady” is grossly misleading.

The tests might share the same name, but the 2013 and 2014 tests are distinctly different; therefore, results from 2013 and 2014 are not open to useful comparison.

In order to compare two different tests, one must calibrate the newer test with the older one. That takes data in the form of piloting the test (which White has not done), and it takes time, certainly more than the Friday-to-Tuesday that White scraped up by delaying the score release from May 16 to May 20.

As it stands, a student’s 2013 LEAP or iLEAP score, even if it is exactly the same number, does not automatically hold the same meaning as the very same number on the 2014 LEAP or iLEAP test.

There is more that complicates the issue:

There is no evidence that the scoring categories from 2013 to 2014 align, even if White did use the same numeric cutoff scores for each category.

White needs to publicly release the cutoff scores that the LDOE under his direction actually used for 2013 and 2014 LEAP and iLEAP tests.

How about some transparency??

Altering cutoff criteria also “muddies” (yes, I wrote it) any comparison of one set of scores with another. Moreover, altering category cutoff scores enables the one setting the cutoffs to shape score results according to his own purposes.
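To see how cutoffs alone can move the reported numbers, here is a minimal Python sketch. The scale scores and both cutoff sets are hypothetical, invented only for illustration; they are not LDOE's actual 2013 or 2014 cutoffs. The only thing that changes between the two runs is where the category lines are drawn:

```python
from bisect import bisect_right

LEVELS = ["Unsatisfactory", "Approaching Basic", "Basic", "Mastery", "Advanced"]


def classify(score, cutoffs):
    """Map a scale score to a performance level.

    `cutoffs` lists the lowest score for each level above Unsatisfactory,
    in ascending order; a score equal to a cutoff earns that level.
    """
    return LEVELS[bisect_right(cutoffs, score)]


def percent_at_or_above(scores, cutoffs, level="Basic"):
    """Percent of scores classified at `level` or higher."""
    target = LEVELS.index(level)
    hits = sum(1 for s in scores if LEVELS.index(classify(s, cutoffs)) >= target)
    return 100 * hits / len(scores)


# Hypothetical scores and cutoff sets (NOT real LEAP/iLEAP values).
scores = [248, 275, 296, 303, 318, 341, 352, 367, 389, 410]
original_cutoffs = [250, 300, 350, 400]
lowered_cutoffs = [250, 290, 340, 400]

print(percent_at_or_above(scores, original_cutoffs))  # 70.0
print(percent_at_or_above(scores, lowered_cutoffs))   # 80.0
```

Not a single student's score changed; only the cutoffs moved, and “Basic and above” rose by ten points.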

As for students and schools, they bear the public brunt of it, even if the consequences are supposedly “relaxed” by the cutoff score manipulator.

Based upon the brouhaha allegedly coming from the Louisiana Department of Education (LDOE) in the days between LDOE’s receiving the 2014 LEAP and iLEAP scores and publicly releasing said scores, I’m thinking that numerous scenarios were toyed with regarding those LEAP and iLEAP category cutoffs.

So, to parents and administrators who are chagrined at comparisons between 2013 and 2014 LEAP and iLEAP results: Know that the comparisons are fiction.

You might as well attribute the results to pixie dust and fairies.

Do not be disturbed by movement up or down in percentile rankings within the 2014 LEAP and iLEAP scores, either. Movement in such rankings is not clearly connected to set criteria for passing or failing. Theoretically, all students in all Louisiana schools could pass LEAP and iLEAP, and some districts would have to be ranked lower than others. When averages are used, someone must be below average.

Of course, the reverse is also true: All students could theoretically fail a test based upon some set criterion, and still, some score would be highest and could therefore be termed “the 99th percentile.” All that this means is that the score is higher than those of 99 percent of other test takers, even if it is deemed a non-passing score by that set criterion.
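Percentile rank measures position within the group, not performance against a passing criterion. Here is a minimal Python sketch using one common definition (the percent of scores strictly below a given score; other definitions also count half of any ties), with hypothetical scores and a hypothetical passing cutoff of 300:

```python
def percentile_rank(scores, x):
    """Percent of scores strictly below x."""
    below = sum(1 for s in scores if s < x)
    return 100 * below / len(scores)


PASSING = 300  # hypothetical passing cutoff

# Everyone fails, yet the top score still outranks 90% of the group.
all_fail = [210, 225, 240, 255, 260, 270, 275, 280, 290, 295]
print(max(all_fail) >= PASSING)                  # False
print(percentile_rank(all_fail, max(all_fail)))  # 90.0

# Everyone passes, yet the lowest score still sits at the bottom.
all_pass = [s + 100 for s in all_fail]
print(min(all_pass) >= PASSING)                  # True
print(percentile_rank(all_pass, min(all_pass)))  # 0.0
```

With only ten test takers, the top score can reach only the 90th percentile under this definition; with thousands of test takers, it lands at the 99th or above, regardless of whether it passes.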

In the current situation, for stakeholders to get stuck on, “We were a higher percentile ranking last year” is to fall into the trap of comparing a 2014 PARCC-like LEAP or iLEAP to a 2013 non-PARCC-like LEAP or iLEAP.

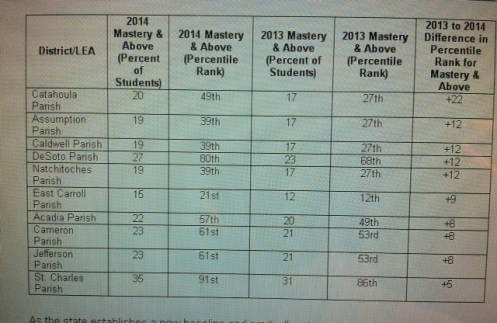

The May 27, 2014, LDOE press release includes percentile rank “comparisons” that are nothing more than illusion. First of all, the comparisons from 2013 to 2014 are useless. However, not only is White attempting to promote them as useful; he is attempting to draw attention away from what looks like small percentage “gains” to more “dramatic” “changes” in percentile rank. He needs to showcase some “sensational” numbers. Whether or not such numbers are fact-based is irrelevant to White. Consider this press release offering:

Notice that Catahoula Parish “jumped” 22 percentile ranks by moving from “17 percent mastery and above in 2013” to “20 percent in 2014.” As noted previously in this post, this is not a legitimate comparison; however, even if it were, the gain would be only three percentage points. Somehow (a mystery to those outside of LDOE) those three points “produced” the “sensational 22-percentile rank gain.”

Notice also that East Carroll “gained” three percentage points, from 12 percent to 15 percent (keep in mind, the comparison is a fraud), yet East Carroll “moves” only 9 percentile ranks “from 2013 to 2014.”
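How can the same three-point change be worth 22 percentile ranks in one parish and 9 in another? One factor is where a district sits in the crowd. Here is a minimal Python sketch, assuming a hypothetical field of 20 other districts. These are invented numbers, not the actual 2014 data, and this is not LDOE's method, whose exact details are unknown to us. For simplicity it measures both years against one field. A three-point gain through a crowded stretch of the distribution passes many districts, while the same gain through a sparse stretch passes few:

```python
def percentile_rank(values, x):
    """Percent of values strictly below x."""
    below = sum(1 for v in values if v < x)
    return 100 * below / len(values)


def percentile_gain(before, after, field):
    """Change in percentile rank when a value moves from `before` to `after`,
    measured against the same (hypothetical) field of other districts."""
    return percentile_rank(field, after) - percentile_rank(field, before)


# Hypothetical "percent Mastery and above" for 20 other districts (NOT real data).
field = [8, 10, 11, 13, 16, 17, 17, 18, 18, 19,
         19, 19, 21, 24, 27, 30, 33, 36, 40, 45]

print(percentile_gain(17, 20, field))  # 35.0: crowded stretch, seven districts passed
print(percentile_gain(12, 15, field))  # 5.0: sparse stretch, one district passed
```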

So, what is the value of moving “up” three percentage points??

Well, it just depends. Pixie dust and fairies.

Let us now turn our attention toward PARCC:

In his May 27, 2014, press release, White is careful to not call the test of the “new expectations” (i.e., Common Core) what it is: the PARCC test, developed by “a group of states.” (Near the end of the release, he/LDOE do allude to “resources released” by “Louisiana and PARCC.”)

If Louisiana can reshape its 2014 LEAP and iLEAP into a “one-time LEAP and iLEAP aligned to those expectations,” then why are we purchasing PARCC at the still-advertised, estimated price of $29.50 per student?

Why not use the already-in-the-works, “PARCC-like” LEAP and iLEAP? The decision apparently belongs to LDOE. Louisiana has no contract to purchase PARCC.

If PARCC is used next year, which appears to be the direction in which White is taking Louisiana education, then those 2014-15 scores will not be comparable to either the 2013 LEAP/iLEAP or the 2014 “PARCC-like” LEAP/iLEAP.

Steady, up, down, no one will really know.

One final point to this post:

On May 27, 2014, reporter Danielle Dreilinger of nola.com cited me in such a manner as to make it sound as though I bought White’s story of “steady” scores from 2013 to 2014.

Dreilinger, report my position clearly this time:

White’s reported “steady” scores are an illusion.

And I agree with former LDOE employee Jason France’s admonition that those LDOE individuals being pressured into unethical practices in order to “shape” test results need to come forward before the ax falls.

That is like déjà vu with what is going on in Tennessee! Kevin Huffman (also a Chiefs for Change member) is putting pixie dust on TCAP scores here… messing with cut scores and supposedly aligning TCAP questions with Common Core. The test results were not ready from the state when they were supposed to be (everyone is wondering if the scores show that his reforms and Common Core aren’t working). Districts found out on the day the quick scores were due to be released to them that there would be a 10-day delay (announced conveniently after a huge education conference in Nashville was finished and after Arne Duncan was gone), so districts couldn’t get final report cards out in time. So, Huffman said districts could apply for waivers to exempt them from including TCAP in student grades. Huffman granted over a hundred waivers to districts, BUT he didn’t have the legal authority to do so. It will be interesting to see how he wiggles his way out of this hot mess! People are awfully furious at him right now, and with the Governor for appointing him. Even the news media isn’t painting a pretty picture of him like they usually do. Hopefully, Huffman will be fired or will resign soon.

Thanks for your awesome blog, Mercedes! Sometimes it feels like we are living in parallel universes.

Gotta love the use of “percentile ranks” in this reporting of the scores. Besides what you pointed out, using “percentile ranks” yields a wider spread of scores (from 1 to 99) than the actual rank of the districts (1 to 72), making any movement, however irrelevant, seem even bigger. This gave the propagandists numbers about 40 percent bigger to tout as gains.
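The commenter's scaling point can be checked with simple arithmetic. Here is a minimal Python sketch, assuming (hypothetically) that a district's rank among 72 is stretched linearly onto a 1-to-99 percentile scale. LDOE's actual computation may differ:

```python
N_DISTRICTS = 72  # district count given in the comment


def rank_to_percentile(rank_from_bottom, n=N_DISTRICTS):
    """Hypothetical linear map: rank 1 (lowest) -> 1, rank n (highest) -> 99."""
    return 1 + (rank_from_bottom - 1) * 98 / (n - 1)


# Moving up 10 places among 72 districts...
move = rank_to_percentile(40) - rank_to_percentile(30)
print(round(move, 1))  # 13.8 percentile points
```

Under this mapping, every move of one rank is reported as about 1.38 percentile points (98/71), which is close to the “about 40 percent bigger” figure in the comment.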

Unfortunately, the mainstream media picks up and regurgitates these numbers as if they have profound meaning. My local TV news parroted those percentile rank changes last night without giving any context. Thanks for keeping an eye on this.

Thanks, Mercedes. As a Louisiana public school teacher, I am no longer surprised or saddened by the charade John White creates each year with the release of “test scores.” This is war. I am saddened that individuals in LDOE are apparently being pressured to be complicit in this chicanery. John White’s silence on these accusations as reported by Tom Aswell and Jason France speaks volumes.

Manipulating the tests and results is nothing new for the fake education reformers, offering us more proof that educating children has nothing to do with NCLB, Race to the Top or Common Core. Instead, the fake education reform movement, which dates back to the late 1970s and the Walton family’s ongoing voucher war on public schools, is nothing but a political/religious movement that doesn’t care about public education and children. This is about power and turning taxpayer money over to the private sector with no oversight or transparency, which will allow corporations to commit fraud to boost profits, making the wealthy richer than Midas ever was. The same thing that corporate America is doing to our schools also caused the 2007-08 global financial crisis.

Arne Duncan’s Department of Education did the same thing with the 2012 PISA test so they could come up with an average comparison with other countries that would artificially make the US look horrible while leaving out the context. The fake education reformers hate it when people discover the facts that lead to the truth and not to their lies.

The Economic Policy Institute concluded: “The U.S. administration of the most recent (2012) international (PISA) test resulted in students from the most disadvantaged schools being over-represented in the overall U.S. test-taker sample. This error further depressed the reported average U.S. test score.”

Then the conclusion goes on: “U.S. students from advantaged social class backgrounds (students who do not live in poverty) perform better relative to their social class peers in the top-scoring countries of Finland and Canada …”

In fact, the US would look bad in a comparison even if the testing weren’t manipulated, because of the large number of children who live in poverty. Poverty is the anchor that weights the average test score and drags it down. The more poverty a country has, the lower that average will be.
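The averaging point is plain weighted-mean arithmetic. Here is a minimal Python sketch. The 5% and 23% poverty shares echo figures cited in this comment, but the subgroup mean scores (480 and 540) are hypothetical and were chosen only to show the effect. Both countries have identical subgroup performance; only the mix differs:

```python
def overall_mean(groups):
    """Weighted mean of subgroup means.

    `groups` is a list of (percent_of_test_takers, subgroup_mean_score).
    """
    total = sum(pct for pct, _ in groups)
    return sum(pct * score for pct, score in groups) / total


# (percent of students in poverty, their mean), (percent not in poverty, their mean)
low_poverty = [(5, 480), (95, 540)]    # hypothetical subgroup means
high_poverty = [(23, 480), (77, 540)]  # same subgroup means, more poverty

print(overall_mean(low_poverty))   # 537.0
print(overall_mean(high_poverty))  # 526.2
```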

For instance, Finland ranks very high in the PISA comparison and has a poverty rate of less than 5%, and most European countries have poverty rates of 15% or less. In fact, the U.S. has more children living in poverty than the total populations of many of the countries it is compared to. Finland has a population of five million, while the U.S. has 16+ million children living in poverty.

You will take note that the fake education reformers never bring up poverty and how that impacts the results of any standardized tests while nothing of significance has been done to deal with the causes of poverty in the U.S.

The US has a poverty rate of 23%, and many of the children living in poverty are concentrated in urban areas where schools have poverty rates of 70% or higher; not one other country in the developed world even has schools with poverty rates that high.

I wrote about this deliberate fraud here: http://crazynormaltheclassroomexpose.com/2014/05/19/the-fake-education-reformers-smoking-gun-that-leads-from-arne-duncan-to-the-white-house/

The best way to deal with the causes of poverty is early childhood education programs, something the Obama administration plans to address in 2015, when it asks Congress for $75 billion to fund expanding the program over a ten-year period. Why didn’t Obama start with this program first? Why is he waiting until 2015, when he is almost out of office and the odds are that a Congress dominated by the GOP will vote no on this proposal? Was this deliberate, knowing what the results would be?

And there is this report from Education Week that proves how valuable early childhood education is:

“In linkages to early education, states made the biggest movement in the establishment of school-readiness definitions. In 2013, just over half the states (26) have such definitions, continuing an upward trajectory from 19 states in 2009 and 22 in 2011.”

http://www.edweek.org/ew/articles/2013/01/10/16sos.h32.html

Instead of NCLB, Race to the Top and the Machiavellian Common Core testing regime that’s right out of Mao’s Cultural Revolution playbook, Bush and then Obama should have focused on the factors reported by EdWeek.org, starting with high-quality early childhood education programs in every state.

Early childhood education and school readiness are essential to preparing our children to succeed in an increasingly competitive global economy. Compared to other countries, however, the United States lags far behind on preschool, trailing a number of other countries in enrollment, investment, and quality.

The United States ranks behind most of the other countries in the Organization for Economic Co-operation and Development, or OECD. We rank:

• 26th in preschool participation for 4-year-olds

• 24th in preschool participation for 3-year-olds

• 22nd in the typical age that children begin early childhood-education programs

• 15th in teacher-to-child ratio in early childhood-education programs

• 21st in total investment in early childhood education relative to country wealth

Heard through the “grapevine”: the scores were so low they had to drop the bar, and that is why scores were not released. I wonder what would happen if a teacher told John White her report would be turned in on May 16 but turned it in on May 20.

Here’s the thing: It should be possible to produce some decent (albeit limited) comparison between the old iLeap and the new iLeap+PARCC. Presumably they used a batch of questions from the iLeap pool and a new batch.

What you do, if you’re not afraid of the comparison, is back the new out of the old in the analysis. If you’ve planned even halfway well this should be pretty straightforward and is standard procedure in refreshing any test. New questions are salted in and used. They are then backed out and the agency runs the numbers again on the tests and any subtests they want to make comparable. If the new questions skew the numbers so that the comparison is muddled they adjust the weighting. (And weed out questions that turn out to be really bad.)
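The procedure described here, scoring both years only on the items the two forms share, can be sketched in a few lines. This is a minimal Python sketch with hypothetical item IDs and responses. It does not use LDOE data and does not reproduce LDOE's procedure. The second function is simple mean-sigma linear equating, which assumes the two groups of test takers are equivalent in ability. Operational programs typically rely on more robust anchor-test methods, such as Tucker or Levine equating or IRT linking, and on far larger samples:

```python
from statistics import mean, pstdev


def anchor_scores(responses, anchor_items):
    """Score each student only on the shared (anchor) items.

    `responses` is a list of dicts mapping item id -> 1 (correct) or 0 (wrong).
    Items unique to either year's form are ignored.
    """
    return [sum(r[item] for item in anchor_items) for r in responses]


def linear_equate(x, from_scores, to_scores):
    """Mean-sigma linear equating: re-express score x on the scale of
    `to_scores` so that means and standard deviations line up.
    Assumes the two groups are equivalent in ability."""
    slope = pstdev(to_scores) / pstdev(from_scores)
    return mean(to_scores) + slope * (x - mean(from_scores))


# Hypothetical responses: a1-a3 appear on both forms; old1/new1 do not.
cohort_2013 = [{"a1": 1, "a2": 0, "a3": 1, "old1": 1},
               {"a1": 1, "a2": 1, "a3": 1, "old1": 0},
               {"a1": 0, "a2": 0, "a3": 1, "old1": 1}]
cohort_2014 = [{"a1": 1, "a2": 1, "a3": 0, "new1": 0},
               {"a1": 0, "a2": 1, "a3": 1, "new1": 1},
               {"a1": 1, "a2": 1, "a3": 1, "new1": 0}]
ANCHORS = ["a1", "a2", "a3"]

s13 = anchor_scores(cohort_2013, ANCHORS)
s14 = anchor_scores(cohort_2014, ANCHORS)
print(s13, s14)                         # [2, 3, 1] [2, 2, 3]
print(round(mean(s14) - mean(s13), 2))  # 0.33: anchor-only difference between years
```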

It happens all the time and is a necessary part of test construction at this level. There is no reason we shouldn’t be able to look at the aggregated raw data and redo that work ourselves.

Except, of course, that the LDOE doesn’t let anyone look at data but themselves. But they could if they actually cared about developing as valid an instrument as possible and wanted people to trust them.

But…..

No, what we should do is ban standardized testing and trust teachers to do what we expect them to do. That’s what they do in Finland.

What they’ve done in Finland is refuse to use standardized tests as ways to rank or grade students, teachers and schools. They still use standardized tests as the valuable diagnostic tool they can be. The way we use the tool is criminal. It’s not the tool. It’s the way the tool is used. Always.

Well, I haven’t read White’s foolishness about the recent tests and don’t plan to. I did see a newspaper blurb about percentile ranks and couldn’t figure out what the reporter was talking about. I think Mr. Basset’s comment clears that up. Percentile ranks using Louisiana districts: that had to be a Jessica Baghian idea, or the idea of someone else who can add and subtract but not multiply and divide.

The cut scores for 2014 performance levels were posted on the LDoE site recently.

This year’s tests were the same tests as were administered last year. Many of last year’s items were being field tested, so they didn’t count in the scoring. Since there is probably a breakdown of how many questions were asked in each general category for 2013, 2014, or both, a comparison of this year’s strand data could probably guide a rough comparison.

Essentially, the LDoE was supposed to curve the scores so that “the same ability on the 2013 tests would earn the same performance level on the 2014 test.” There was some snafu, though. I just haven’t had a chance to determine what it could have been.

Seems maybe a few districts could pool their data, since no one can get a researcher file from the LDoE. Strip the student and school level identifiers (since some schools are so small you could have FERPA issues) and it wouldn’t be a problem.
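Pooling across districts while protecting students could look something like this minimal Python sketch. The field names (`student_id`, `school_id`, `student_name`, `district`, `grade`, `scale_score`) are hypothetical. The suppression threshold of 10 is also a hypothetical illustration, not a number taken from FERPA, so any real threshold would need to be chosen deliberately:

```python
from collections import defaultdict

# Hypothetical field names and threshold, for illustration only.
IDENTIFIERS = {"student_id", "school_id", "student_name"}
MIN_CELL = 10  # hypothetical minimum group size before results are reported


def strip_identifiers(record):
    """Drop student- and school-level identifying fields from one record."""
    return {k: v for k, v in record.items() if k not in IDENTIFIERS}


def pooled_summary(records, min_cell=MIN_CELL):
    """Group de-identified records by (district, grade) and report
    (count, mean scale score), suppressing groups smaller than min_cell."""
    cells = defaultdict(list)
    for rec in map(strip_identifiers, records):
        cells[(rec["district"], rec["grade"])].append(rec["scale_score"])
    summary = {}
    for key, scores in cells.items():
        if len(scores) < min_cell:
            summary[key] = None  # suppressed: too few students to report safely
        else:
            summary[key] = (len(scores), sum(scores) / len(scores))
    return summary


# Hypothetical pooled records from two districts.
records = [{"student_id": i, "school_id": "S1", "district": "A", "grade": 4,
            "scale_score": 300 + i} for i in range(12)]
records += [{"student_id": 100 + i, "school_id": "S9", "district": "B", "grade": 4,
             "scale_score": 320} for i in range(3)]

print(pooled_summary(records))
# {('A', 4): (12, 305.5), ('B', 4): None}
```

Small-cell suppression is only one disclosure-avoidance step. A fuller approach would also guard against back-calculating suppressed cells from published totals.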

It’d be nice to uncover a bit of ammo for Bobby to shoot White with (and himself in the foot).


To say that the 2013 and 2014 tests are “the same” because PARCC-like items were being “piloted” is a stretch that requires a sophisticated, detailed report, complete with item analyses of the “piloted” items, to begin to justify and explain.

I dare White to produce such a report and evidence that it was produced prior to this year’s LEAP/iLEAP testing.

Meanwhile, I’ll watch the sky for those flying pigs.