Profound Measurement Error in Louisiana “PARCC” Scale Scores

On October 12, 2015, the following email from Louisiana superintendent John White was forwarded to district superintendents, accompanied by 12 files detailing raw-score-to-scale-score conversions for the tests given to Louisiana students in spring 2015 in grades 3 through 8 in ELA and math:

Attached please find charts for converting raw scores to scale scores for 2015 grade 3-8 English and math state assessments.

These are the same conversion tables as will be used in other states where these forms are active. Scale scores and cut scores derived from these conversions will be comparable with those in other states, provided that BESE approves comparable cut scores.

John

Whether the tests Louisiana students took are exactly the same tests that students in all PARCC states took remains unsettled. In a September 29, 2015, article in The Lens, White implies that all PARCC states used “the same form”–

Students across Louisiana “took the exact same form as did kids across the country,” White said. “Same questions. Same order. Nothing different.” [Emphasis added.]

–but in the email excerpt above, White includes a disclaimer about the scale scores applying “in other states where these forms are active.”

To date, White has offered the public no formal documentation to substantiate his claim that Louisiana students “took the exact same form as did kids across the country.” But let’s set that question aside as we consider what White is offering by way of scale scores for those mystery tests.

Below are the 12 files for raw-score-to-scale-score conversion mentioned above:

SP_2015_ELA03_SSScore SP_2015_ELA04_SSScore SP_2015_ELA05_SSScore SP_2015_ELA06_SSScore SP_2015_ELA07_SSScore SP_2015_ELA08_SSScore SP_2015_MAT03_SSScore SP_2015_MAT04_SSScore SP_2015_MAT05_SSScore SP_2015_MAT06_SSScore SP_2015_MAT07_SSScore SP_2015_MAT08_SSScore

Based upon what White presents in the above conversion files, these supposed “PARCC” scale scores are far too imprecise to be used in high-stakes decision making. The issue comes when one considers the error of measurement reported for each scale score.

PARCC or no PARCC, these scale scores have a lot of error.

A lot, which means White is doing the PARCC consortium no favors by implying that these conversion tables come from the consortium and that they reflect the degree of measurement error in Pearson-PARCC tests.

In each of the 12 files above, a raw score is converted into a scale score. Next to each conversion is the standard error of measurement for the resulting scale score (labeled CSEM, for conditional standard error of measurement). This CSEM tells how imprecise a given scale score is likely to be, based upon the bell-shaped curve (the normal distribution).

I don’t want to get too technical, but I do want readers to understand how worthless the scale scores are given the degree of measurement error.

The range of the scale scores is from 650 to 850. The measurement error–CSEM– associated with each of the 201 possible scale scores ranges from 6 scale score points to 20 scale score points, with more error tending to be associated with the lowest and highest scale scores.

Louisiana “PARCC” scale scores are highly unstable.

For example, on the 8th grade math test, a scale score of 713 is likely to be off by plus or minus 11.7 points (12 points, rounded up) according to corresponding CSEM in the math 08 file included above. CSEM is a plus-or-minus number.

Here is what the CSEM of ± 12 means:

The math 08 scale score of 713 is expected to fall within ± 12 points of 713 in 68 percent of the times the same student takes the same test, and within ± 24 points (12 x 2) in 95 percent of the times that the same student takes the same test.

Thus, the 713 that the state assigns this student as a scale score in grade 8 math has so much imprecision that the student’s scale score could be as low as 701 or as high as 725 in 68 out of 100 times if the same student took the same test. By extension, the 713 that the state assigns this student as a scale score in grade 8 math has so much imprecision that the student’s scale score could be as low as 689 and as high as 737 in 95 out of 100 times if the same student took the same test.
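
For readers who want to check the arithmetic, here is a minimal Python sketch of the calculation above. The function name `csem_bands` is my own for illustration and does not come from the state's files. The sketch assumes the CSEM is rounded half-up to a whole point (11.7 becomes 12) and that two CSEMs approximate the 95 percent range.

```python
import math


def csem_bands(scale_score, csem):
    """Return the 68% and 95% score ranges as (low, high) tuples.

    Assumption: the CSEM is rounded half-up to a whole scale-score
    point before the ranges are computed (11.7 -> 12, 9.5 -> 10).
    """
    err = math.floor(csem + 0.5)  # half-up rounding; round() would send 6.5 to 6
    band_68 = (scale_score - err, scale_score + err)          # +/- 1 CSEM
    band_95 = (scale_score - 2 * err, scale_score + 2 * err)  # +/- 2 CSEM
    return band_68, band_95


# Grade 8 math example: scale score 713, CSEM 11.7
print(csem_bands(713, 11.7))  # ((701, 725), (689, 737))
```

The same function can be applied to any scale score and CSEM pair read from the conversion files.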

It’s that bad.

Let’s consider the average raw scores released by the state on Friday, October 09, 2015, and how these convert into scale scores and are influenced by CSEM imprecision. Below is the table of raw score averages:

ELA:

The ELA 03 statewide mean raw score is 37 out of 100. The scale score for 37 is 739, with a CSEM imprecision of ± 9.5 points. Thus, the 739 could realistically be as low as 729 or as high as 749 in 68 out of 100 times that the same student takes the same test. Moreover, the 739 could realistically be as low as 719 or as high as 759 in 95 out of 100 times that the same student takes the same test.

The ELA 04 statewide mean raw score is 43 out of 104. The scale score for 43 is 743, with a CSEM imprecision of ± 8.1 points. Thus, the 743 could realistically be as low as 735 or as high as 751 in 68 out of 100 times that the same student takes the same test. Moreover, the 743 could realistically be as low as 727 or as high as 759 in 95 out of 100 times that the same student takes the same test.

The ELA 05 statewide mean raw score is 38 out of 104. The scale score for 38 is 739, with a CSEM imprecision of ± 7.3 points. Thus, the 739 could realistically be as low as 732 or as high as 746 in 68 out of 100 times that the same student takes the same test. Moreover, the 739 could realistically be as low as 725 or as high as 753 in 95 out of 100 times that the same student takes the same test.

The ELA 06 statewide mean raw score is 56 out of 137. The scale score for 56 is 741, with a CSEM imprecision of ± 6.2 points. Thus, the 741 could realistically be as low as 735 or as high as 747 in 68 out of 100 times that the same student takes the same test. Moreover, the 741 could realistically be as low as 729 or as high as 753 in 95 out of 100 times that the same student takes the same test.

The ELA 07 statewide mean raw score is 50 out of 135. The scale score for 50 is 739, with a CSEM imprecision of ± 7.5 points. Thus, the 739 could realistically be as low as 731 or as high as 747 in 68 out of 100 times that the same student takes the same test. Moreover, the 739 could realistically be as low as 723 or as high as 755 in 95 out of 100 times that the same student takes the same test.

The ELA 08 statewide mean raw score is 64 out of 137. The scale score for 64 is 740, with a CSEM imprecision of ± 7.3 points. Thus, the 740 could realistically be as low as 733 or as high as 747 in 68 out of 100 times that the same student takes the same test. Moreover, the 740 could realistically be as low as 726 or as high as 754 in 95 out of 100 times that the same student takes the same test.

Math:

The math 03 statewide mean raw score is 34 out of 81. The scale score for 34 is 741, with a CSEM imprecision of ± 7.3 points. Thus, the 741 could realistically be as low as 734 or as high as 748 in 68 out of 100 times that the same student takes the same test. Moreover, the 741 could realistically be as low as 727 or as high as 755 in 95 out of 100 times that the same student takes the same test.

The math 04 statewide mean raw score is 37 out of 82. The scale score for 37 is 737, with a CSEM imprecision of ± 6.8 points. Thus, the 737 could realistically be as low as 730 or as high as 744 in 68 out of 100 times that the same student takes the same test. Moreover, the 737 could realistically be as low as 723 or as high as 751 in 95 out of 100 times that the same student takes the same test.

The math 05 statewide mean raw score is 34 out of 82. The scale score for 34 is 736, with a CSEM imprecision of ± 6.6 points. Thus, the 736 could realistically be as low as 729 or as high as 743 in 68 out of 100 times that the same student takes the same test. Moreover, the 736 could realistically be as low as 722 or as high as 750 in 95 out of 100 times that the same student takes the same test.

The math 06 statewide mean raw score is 27 out of 82. The scale score for 27 is 735, with a CSEM imprecision of ± 6.8 points. Thus, the 735 could realistically be as low as 728 or as high as 742 in 68 out of 100 times that the same student takes the same test. Moreover, the 735 could realistically be as low as 721 or as high as 749 in 95 out of 100 times that the same student takes the same test.

The math 07 statewide mean raw score is 24 out of 82. The scale score for 24 is 735, with a CSEM imprecision of ± 6.5 points. Thus, the 735 could realistically be as low as 728 or as high as 742 in 68 out of 100 times that the same student takes the same test. Moreover, the 735 could realistically be as low as 721 or as high as 749 in 95 out of 100 times that the same student takes the same test.

Finally, the math 08 statewide mean raw score is 22 out of 80. The scale score for 22 is 740, with a CSEM imprecision of ± 9.5 points. Thus, the 740 could realistically be as low as 730 or as high as 750 in 68 out of 100 times that the same student takes the same test. Moreover, the 740 could realistically be as low as 720 or as high as 760 in 95 out of 100 times that the same student takes the same test.

The imprecision in these scale scores is profound, and any presupposed usage of these scale scores for measuring “growth” is nonsense at best and destructive at worst.

The instability of the scale scores is a reliability issue– it concerns the inability of the same student (with the same, unaltered knowledge base) to take the same test and receive the same score. In reality, there is some measurement error in all standardized test scores, but the amount should be low so that the resulting hypothetical 100-times-testing score ranges are narrow.
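
The hypothetical repeated testing can be imitated with a small simulation. This is only a sketch under stated assumptions: it treats the reported scale score as the student's true score and draws retest scores from a normal distribution whose standard deviation equals the CSEM. The function name `simulate_retests` and its parameters are mine, chosen for illustration.

```python
import random


def simulate_retests(scale_score, csem, n=100_000, seed=0):
    """Return the share of simulated retests within 1 and 2 CSEMs.

    Assumptions: retest scores are normally distributed around
    scale_score with standard deviation csem, and the student's
    knowledge does not change between sittings.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    scores = [rng.gauss(scale_score, csem) for _ in range(n)]
    within_1 = sum(abs(s - scale_score) <= csem for s in scores) / n
    within_2 = sum(abs(s - scale_score) <= 2 * csem for s in scores) / n
    return within_1, within_2


# Grade 8 math example: scale score 713, CSEM 11.7
share_1, share_2 = simulate_retests(713, 11.7)
print(f"within 1 CSEM: {share_1:.2f}, within 2 CSEMs: {share_2:.2f}")
```

Under these assumptions, roughly 68 percent of simulated retests land within one CSEM of the score and roughly 95 percent land within two, which is where the "68 out of 100" and "95 out of 100" figures come from.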

The high degree of measurement error in White’s “PARCC” scale scores makes them useless. No setting of any particular cut score levels can save these scale scores from their measurement error.

If the cut scores are set at 700, 725, 750, and 800, then the same student taking the same test 100 times could realistically have scale scores that overlap multiple cut scores, as noted in the 95-out-of-100-test-times examples above.
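
To make the overlap concrete, here is a short Python sketch that lists which cut scores fall inside a score's 95-out-of-100 range. The cut scores are the hypothetical ones named above, not approved values, and the function name `cuts_inside_band` is my own. As in my examples, the CSEM is assumed to be rounded half-up to a whole point.

```python
import math

# Hypothetical cut scores from the paragraph above (not BESE-approved values)
CUT_SCORES = (700, 725, 750, 800)


def cuts_inside_band(scale_score, csem, n_errors=2, cuts=CUT_SCORES):
    """List the cut scores lying within scale_score +/- n_errors * CSEM.

    Assumption: the CSEM is rounded half-up to a whole point first.
    """
    err = math.floor(csem + 0.5)
    low = scale_score - n_errors * err
    high = scale_score + n_errors * err
    return [c for c in cuts if low <= c <= high]


# Math 08 statewide mean: scale score 740, CSEM 9.5 -> range 720 to 760
print(cuts_inside_band(740, 9.5))  # [725, 750]
# ELA 03 statewide mean: scale score 739, CSEM 9.5 -> range 719 to 759
print(cuts_inside_band(739, 9.5))  # [725, 750]
```

When two cut scores sit inside the range, the same student could plausibly land in any of three adjacent achievement levels on a retest.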

The Louisiana “PARCC” scale scores are a nonsense venture.

Wonder if the PARCC consortium will publicly claim Louisiana scale scores and their associated measurement errors as its own.

_____________________________________________________________

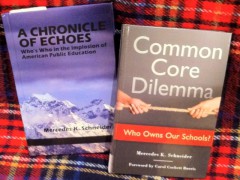

Schneider is a southern Louisiana native, career teacher, trained researcher, and author of the ed reform whistle blower, A Chronicle of Echoes: Who’s Who In the Implosion of American Public Education.

She also has a second book, Common Core Dilemma: Who Owns Our Schools?, published on June 12, 2015.


“Thus, the 739 could realistically be as low as 731 or as high as 747 in 68 out of 100 times that the same student takes the same test. Moreover, the 739 could realistically be as low as 723 or as high as 755 in 95 out of 100 times that the same student takes the same test.”

I really don’t get it. How could a score vary as much as +/- 8 points 68% of the time but vary more +/- 16 points 95% of the time. That would automatically mean that the score varied by at least +/- 8 points 95% of the time. Should that be the opposite–+/- 16 points at 68% and +/- 8 points at 95%?

The results of the 100 test times form a normal distribution in which 68 results fall within one standard deviation (defined by the std error of measurement) and 95 fall within two SDs.

2old2tch has a valid point. The standard error, as one standard deviation from the mean, indicates that on 68% of the occasions that the same student takes the same test the score will be in the range mean +/- one standard error. So only on 32% of the occasions will the score be outside this interval, and only on 5% of the occasions will the score be outside the mean +/- two standard errors.

This is more of a problem for an individual score than for the average score from a class of say 25 students, where the standard error is reduced by a factor of 5 (being the square root of 25), and it is the class average which is used in the teacher evaluation. (This doesn’t make me think that the VAM is any good!)

Thank you, Mercedes, for bringing up this issue. It’s difficult to explain statistical error to most people in non-technical terms, but that error is SO important in understanding numerical data. Why isn’t this taught in high school instead of ‘factoring polynomials’?

Nothing irritates me more than ‘ranking’ which doesn’t take into account this error. Unless one group or individual deviates from another by AT LEAST one standard deviation (and if important consequences result, like a school closing, a bonus, or a firing, I would say at least two, maybe three), the ranking is meaningless.

In a court, a person is supposed to be ‘innocent until proven guilty beyond a reasonable doubt’. What is a ‘reasonable doubt’? I don’t think one standard deviation is enough (32% doubt). I’m not even sure two is (5% of the time you will condemn an innocent person, or fire an innocent teacher). There is no absolute certainty, but I might go with three, knowing I’d be firing the wrong person only about three times in a thousand. But, what if you were that one?